how to not index a url

or how depending on robots meta tag specifications might kill your rankings

by Valentin Pletzer - November 20, 2018 (updated: March 5, 2021)

<meta name="robot" content="noindex" /> Did you spot it? What looks like an error (and in fact you might argue it is one) is actually accepted by Google and will exclude your HTML page from the search results. A fellow SEO (@termfrequenz) discovered this oddity after wondering why a page didn’t show up in the Google search results even though the popular browser plugin Seerobots reported it as indexable. As it turns out, Google accepts robot (singular) as well as robots (plural).

Don’t get me wrong: I really love Seerobots. It has a great UI and is really lightweight, but it has an issue with pages that don’t follow the robots meta tag specifications to the letter, literally. And Seerobots is not alone. Most of the crawlers out there, including all of the ones I tried, differ from Google in how they handle issues related to the robots meta tag and the X-Robots-Tag HTTP header.
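To make the discrepancy concrete, here is a minimal Python sketch (standard library only) contrasting a strict check, which only accepts the name robots as the specification says, with a lenient one that also accepts robot, as Google did in this test. The helper names MetaCollector and is_noindex are hypothetical, and this is not how Seerobots or Google are actually implemented.

```python
from html.parser import HTMLParser


class MetaCollector(HTMLParser):
    """Collects (name, content) pairs from every <meta> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.metas: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <meta ... /> also ends up here via handle_startendtag.
        if tag == "meta":
            attributes = dict(attrs)
            name = (attributes.get("name") or "").strip().lower()
            self.metas.append((name, attributes.get("content") or ""))


def is_noindex(html: str, accepted_names: set[str]) -> bool:
    """True if a meta tag with an accepted name carries a noindex token."""
    parser = MetaCollector()
    parser.feed(html)
    for name, content in parser.metas:
        tokens = content.replace(",", " ").lower().split()
        if name in accepted_names and "noindex" in tokens:
            return True
    return False


page = '<html><head><meta name="robot" content="noindex" /></head></html>'
print(is_noindex(page, {"robots"}))           # strict, spec-only check: False
print(is_noindex(page, {"robots", "robot"}))  # lenient, Google-like check: True
```

The only difference between the two calls is the set of accepted names, which is exactly where a tool and Google can silently disagree about the same page.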

a growing list of edge cases

After the initial report about the “robot” discovery, I thought: maybe this isn’t the only edge case out there. As it turns out, there are a lot of them. First stop: the Google help pages, which I would recommend every webmaster and SEO read. The Google documentation more or less sticks to the original notes agreed on in 1996 at the Distributed Indexing/Searching Workshop by attendees from Excite, InfoSeek, and Lycos.

If a webpage sticks to the directives documented on the Google help pages and in the original notes, there are many tools that can tell you whether your page is eligible for indexing. But the moment you stray off the beaten path (by accident or not), you may run into a lot of curious cases.

All in all, I documented a list of different noindex implementations, eight of which I tested with several well-known crawling tools and services in November 2018 (see Google Docs).

Google tries to help you

Since Google does not fully document this behaviour, there is no way to be sure. But it looks like Google tries to honor your wishes even if you do not stick to the specifications.

- misspelling of noindex

<meta name="robots" content="noindexa, follow" />

Some tools run a simple string search on the robots directives, which leads them to falsely detect a noindex instruction.

- value not content

<meta name="robots" value="noindex, follow" />

According to the specifications, the correct attribute for directives is content, but Google does in fact honor the value attribute, which is a common attribute in meta tags as well.

- value and content

<meta name="robots" value="noindex, follow" content="index, follow" />

If both content and value attributes are used, Google prefers content.

- no comma

<meta name="robots" content="noindex follow" />

Google accepts a space-separated list as well.

- an additional X-Robots-Tag HTTP header

<meta name="robots" content="index, follow" />
X-Robots-Tag: noindex

An HTTP response with an X-Robots-Tag header instructing crawlers not to index a page can directly contradict the meta tag on the page.

- only an X-Robots-Tag HTTP header

X-Robots-Tag: noindex

Instead of an HTML meta tag, an HTTP header alone can be used as well.

- meta googlebot tag index, X-Robots-Tag header noindex

<meta name="googlebot" content="index, follow" />
X-Robots-Tag: noindex

Even though the HTML meta tag is more specific, the more restrictive HTTP header wins.

- the X-Robots HTTP header

<meta name="robots" content="index, follow" />
X-Robots: noindex

Here the HTTP header is wrongly named X-Robots instead of X-Robots-Tag.

- robot without s

<meta name="robot" content="noindex, follow" />

The specification defines the global default as robots (with s), but Google also obeys instructions for robot (without s).

- additional user-agent

<meta name="robots" content="index, follow" />
<meta name="googlebot" content="noindex" />

The specification defines the global default as robots, which can be combined with a second meta tag specifically for Googlebot.

- UPPERCASE

<META NAME="ROBOTS" CONTENT="NOINDEX, FOLLOW" />

Using uppercase doesn't matter: Google correctly interprets the robots directive.

- noindex meta tag in body

…</head><body><meta name="robots" content="noindex, follow" />

Meta tags in the body of the HTML are not ignored by Google.

- unavailable_after meta tag

<meta name="robots" content="unavailable_after: 1 Jan 1970 00:00:00 GMT" />

The robots specification allows for an expiry date in the meta tag, but here it had no effect on indexing.

- unavailable_after X-Robots-Tag

X-Robots-Tag: unavailable_after: Thu, 01 Jan 1970 00:00:00 GMT

As with the HTML version, unavailable_after in the HTTP header has no effect on indexing either.

- canonical to noindex

"noindex", being the most restrictive rule, takes precedence over "index". However, a page that is itself set to index and points via canonical to a copy set to noindex will be indexed nevertheless.

- iFrame in head

Rendering HTML elements that belong in the body (such as an iframe) inside the head ends the head at that point. So if an iframe is placed in the head before the robots meta tag, the meta tag is pushed into the body, but Google uses it nevertheless.

- robots meta in body

Since all documentation refers to the robots meta tag in the head of the HTML, one might wrongly assume that a noindex in the body is ignored.

- mix it all up

x-robots noindex, meta robots noindex, meta googlebot index

- noindex via Javascript

Some tools might not detect a robots instruction that is only added when the page is rendered, but Google does.
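The observations above can be condensed into a rough Python sketch of a Google-like check. It encodes only what the tests showed (whole-token matching, content preferred over value, the names robots, robot and googlebot, meta tags counted anywhere in the document, X-Robots-Tag honoured, the most restrictive rule winning, unavailable_after ignored) plus one clearly labelled assumption: the misnamed X-Robots header is ignored. The names GOOGLE_META_NAMES, directive_tokens, RobotsMetaCollector and looks_indexable are hypothetical, and like many crawlers this parses only the raw HTML, so a noindex added via JavaScript goes unnoticed.

```python
from html.parser import HTMLParser

# Meta names treated as addressed to Googlebot. "robot" (no s) is included
# because Google honoured it in the experiment described above.
GOOGLE_META_NAMES = {"robots", "robot", "googlebot"}


def directive_tokens(raw: str) -> set[str]:
    """Split a directive list on commas and/or whitespace, case-insensitively.

    Matching whole tokens avoids the substring trap: "noindexa" is not "noindex".
    """
    return set(raw.replace(",", " ").lower().split())


class RobotsMetaCollector(HTMLParser):
    """Collects directives from every matching <meta> tag, in <head> or <body>."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[set[str]] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names, not attribute values.
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").strip().lower()
        if name not in GOOGLE_META_NAMES:
            return
        # Prefer content; fall back to the non-standard value attribute.
        raw = attributes.get("content")
        if raw is None:
            raw = attributes.get("value") or ""
        self.directives.append(directive_tokens(raw))


def looks_indexable(html: str, headers: dict[str, str]) -> bool:
    """Return False if any source (meta tag or header) says noindex."""
    collector = RobotsMetaCollector()
    collector.feed(html)
    sources = list(collector.directives)
    for header, value in headers.items():
        # Assumption: only the documented X-Robots-Tag name is read,
        # so a misnamed "X-Robots" header is ignored.
        if header.lower() == "x-robots-tag":
            sources.append(directive_tokens(value))
    # The most restrictive rule wins: a single noindex anywhere is enough.
    return not any("noindex" in tokens for tokens in sources)


if __name__ == "__main__":
    print(looks_indexable('<meta name="robots" content="noindexa, follow" />', {}))  # True
    print(looks_indexable('<meta name="robots" value="noindex, follow" />', {}))  # False
    print(looks_indexable('<meta name="googlebot" content="index, follow" />',
                          {"X-Robots-Tag": "noindex"}))  # False
```

Collecting every source first and only then checking for noindex mirrors the "most restrictive wins" behaviour; a tool that stops at the first robots tag it finds, or only looks inside the head, would disagree with Google on several of the cases listed above.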

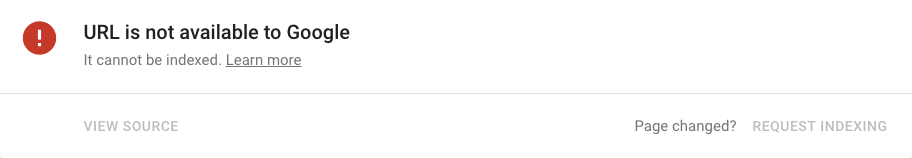

double check if you want to be sure

The new Google Search Console has a nifty feature called “URL inspect”. It basically lets you look up whether a page is already in the Google index or at least could be. To the best of my knowledge, it works exactly like the real indexer and is therefore the most reliable tool around, one everyone should know.

After testing and documenting several cases, I reached out to the vendors of all the well-known crawling tools, all of whom were really helpful, because, as it turns out, there is a difference between what URL Inspect reports and what the tools report. The most common explanation is that the vendor sticks to the original robots specifications rather than Google’s interpretation, because Google isn’t the only search engine and might change its behaviour without notice. While this is coherent in itself, it creates an interesting dilemma for webmasters who rely on Google.

So after some extensive testing and experimenting, I would recommend one thing above all: double check with the search engine you want your pages to be listed in and do not rely on the specifications. And if you use a crawling service or tool, which I would also strongly recommend, you should know its limits.

recommendation

If you are serious about optimizing your webpage, you need a tool to help you keep track of all the issues a website can have. While my experiment did show a lot of interesting edge cases, there are far more common issues that arise every day, and a good tool can help you with those. Find the tool that suits your use case best and try to understand its strengths and limits.

This is a list of all the tools in my experiment that you might want to have a look at (in alphabetical order):

feedback welcome

If you like this article and my Chrome extension, experience any problems, have ideas for upgrades or just want to say hi, feel free to contact me on Mastodon @VorticonCmdr.